SSH is the preferred (perhaps de facto) remote login service for all things UNIX. The old-school remote login was telnet. But telnet was completely insecure. Not only was the confidentiality of the session not protected, but the password wasn’t protected at all – not weak protection – no protection.

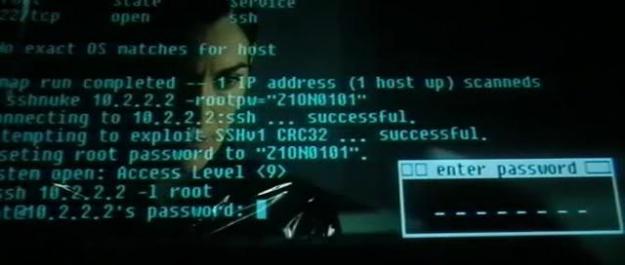

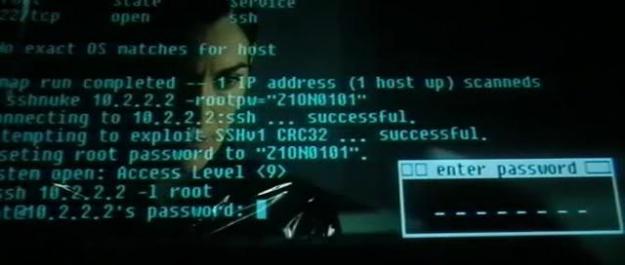

And so SSH (aka Secure Shell) was developed… But it has not been without its failings. There are two “flavors” of SSH: Protocol 1 and Protocol 2. Protocol 1 turned out to have pretty serious design flaws. The hack of SSH using the Protocol 1 weaknesses was featured in the movie Matrix Reloaded. So, by 2003, the flaws and the script kiddie attack were understood well enough to have the Wachowski Brothers immortalize them.

Another concern to watch out for is that SSH has port-forwarding capabilities built into it. So, it can be used to circumvent web proxies and pierce firewalls.

All in all though, SSH is very powerful and can be a very secure way to remotely access either the shell or (via port forwarding) the services on your host.
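As a rough illustration of port forwarding, the sketch below wraps a local forward in a small shell function. The function name, the local port 8080, and the remote port 80 are assumptions chosen for the example; -N (no remote command) and -L (local forward) are standard OpenSSH client options.

```shell
# Hypothetical helper: forward local port 8080 to port 80 on the remote host.
# Usage: forward_web YourUser@Hostname
forward_web() {
    # -N: do not run a remote command, just hold the tunnel open.
    # -L: listen on localhost:8080 and relay to localhost:80 as seen from the server.
    ssh -N -L 8080:localhost:80 "$1"
}
```

While the function is running, a browser pointed at http://localhost:8080 on the workstation should reach the web service on the remote host through the encrypted session.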

For additional information on SSH’s port-forwarding capabilities:

Be aware that SSH is part of a family of related utilities; check out SCP, too.

Configuration

After installing the SSH server (perhaps: apt-get install openssh-server), you will want to turn your attention to the configuration file /etc/ssh/sshd_config

Here are a few settings to consider:

Protocol 2

PermitRootLogin no

Compression yes

PermitTunnel yes

Ciphers aes256-cbc,aes256-ctr,aes128-cbc,aes192-cbc,aes128-ctr

MACS hmac-sha1,hmac-sha1-96

Banner /etc/issue.net

- The “Protocol” setting should not include “Protocol 1”. It’s broken; don’t use it.

- PermitRootLogin should never be “yes” (so, of course, that was the default in older OpenSSH releases!). The best option here is “no”, but if you need or want to have direct remote root access (perhaps as a rescue account), then the “without-password” option (spelled “prohibit-password” in newer OpenSSH releases) is better than “yes”. It disables password logins for root, which forces you to set up and use a key pair to authenticate access.

- Unless your host’s CPU is straining to keep up, turn on compression. Turn it on especially if you are ever using a slow network connection (and who isn’t).

- PermitTunnel: If you are not going to access services remotely using SSH as a sort of micro-VPN, then set this to “no”. Because I use the tunneling feature, I have it turned on.

- OK; I work and consult on cryptographic controls, so I restrict SSH to the FIPS 140-2 acceptable encryption algorithms.

- Likewise, I restrict the Message Authentication Codes (MACS) to stronger hashes.

- Some jurisdictions seem to not consider hacking a crime unless you explicitly forbid unauthorized access, so I use a banner.
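One way to double-check the first two settings is a small audit script. This is only a sketch: the function name audit_sshd_config is made up for this example, and it looks at just the Protocol and PermitRootLogin keywords discussed above.

```shell
# Hypothetical helper: warn about risky values in an sshd_config-style file.
# Usage: audit_sshd_config /etc/ssh/sshd_config
audit_sshd_config() {
    config_file="$1"
    awk '
        # Skip comments and blank lines.
        /^[ \t]*#/ || NF == 0 { next }
        # sshd_config keywords are case-insensitive, so compare in lowercase.
        { key = tolower($1) }
        key == "protocol" && $2 ~ /1/ {
            print "WARN: Protocol 1 is enabled"
        }
        key == "permitrootlogin" && tolower($2) == "yes" {
            print "WARN: root may log in with a password"
        }
    ' "$config_file"
}
```

The function prints nothing when neither problem is found, so empty output is the good case.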

Sample Banner

It seems that (at least at one point in the history of law & the internet) systems which did not have a login banner prohibiting unauthorized use may have had difficulty punishing those that abused their systems. (Of course, it is pretty hard to do so anyway, but…) Here is the login banner that I use:

* - - - - - - - W A R N I N G - - - - - - - - - - W A R N I N G - - - - - - - *

* *

* The use of this system is restricted to authorized users. All information *

* and communications on this system are subject to review, monitoring and *

* recording at any time, without notice or permission. *

* *

* Unauthorized access or use shall be subject to prosecution. *

* *

* - - - - - - - W A R N I N G - - - - - - - - - - W A R N I N G - - - - - - - *

Account Penetration Countermeasures

Within hours of establishing an internet accessible host running SSH, your logs will start to show failed attempts to log into root and other accounts. Here is a sample from a recent Log Watch report:

--------------------- SSHD Begin ------------------------

Failed logins from:

58.222.11.2: 6 times

211.156.193.131: 1 time

Illegal users from:

60.31.195.66: 3 times

203.188.159.61: 1 time

211.156.193.131: 3 times

Users logging in through sshd:

myaccount name:

xx.xx.xxx.xx: 3 times

---------------------- SSHD End -------------------------

One of the most effective controls against password guessing attacks is locking out accounts after a predetermined and limited number of password attempts. This has a tendency to turn out to be a “three strikes and you’re out” rule.

The problem with applying such a policy with a remote service, like SSH, as opposed to your desktop login/password, is that blocking the password guessing attack becomes a Denial of Service attack. Any known (or guessed) login ID on the remote machine will end up being locked out due to the remote attacks.

Enter Fail2ban: Rather than lock out the account, Fail2ban blocks the IP address. Fail2ban will monitor your logs, and when it detects login or password failures that are coming from a particular host, it blocks future access (to either that service or your entire machine) from that host for a period of time. (Oh, and you may notice I said blocks access to the “service”, and not “SSH” – that’s because Fail2ban can detect and block brute force password attacks against SSH, Apache, mail servers, and so on…)
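To get a feel for what Fail2ban is looking for, the failed attempts can be counted per source address by hand. The sketch below is not how Fail2ban works internally; it assumes OpenSSH's usual "Failed password for ... from ADDRESS port ..." log lines, handles IPv4 addresses only, and uses a hypothetical function name. The log location varies by system (for example /var/log/auth.log on Debian-style hosts).

```shell
# Hypothetical helper: count failed SSH password attempts per source IP.
# Usage: count_failed_logins /var/log/auth.log
count_failed_logins() {
    log_file="$1"
    grep 'Failed password' "$log_file" |
        # Keep only the IPv4 address that follows " from ".
        sed -n 's/.* from \([0-9.]*\) port .*/\1/p' |
        # Count occurrences per address, busiest address first.
        sort | uniq -c | sort -rn
}
```

Each output line is a count followed by an address, which is roughly the information Fail2ban acts on when it decides to ban a host.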

How to Forge has a great article on setting up Fail2ban – Preventing Brute Force Attacks With Fail2ban – check it out.

One tweak for now. As I tend to use certificate authentication with SSH (next topic), I rarely log in with a password. As a result, I tend to use a long bantime, ranging from a few hours on up. Three guesses every few hours really slows down a brute force attack! Also, check out the ignoreip option, which can be used to make sure that at least one host doesn’t get locked out. (You can lock yourself out with Fail2ban… I have done it…)
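A jail definition along these lines would express that tweak. Treat it as a sketch rather than a drop-in file: the jail is named [sshd] in recent Fail2ban releases but [ssh] in some older packages, the address 192.0.2.10 is a placeholder for a trusted host, and the helper function that writes the file is invented for this example.

```shell
# Hypothetical helper: write a minimal Fail2ban override file to the given path.
# Usage (as root): write_jail_local /etc/fail2ban/jail.local
write_jail_local() {
    cat > "$1" <<'EOF'
[DEFAULT]
# Never ban these addresses (placeholder trusted host shown).
ignoreip = 127.0.0.1/8 192.0.2.10

[sshd]
enabled  = true
# Three failed attempts trigger a ban...
maxretry = 3
# ...that lasts three hours (value is in seconds).
bantime  = 10800
EOF
}
```

Fail2ban needs to be restarted or reloaded before a changed jail.local takes effect.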

SSH Certificate Based Authentication Considerations

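Secure Shell offers the ability to authenticate with a public/private key pair (strictly speaking this is public key authentication rather than a true certificate, but I will call it certificate based authentication here). There are two ways you might consider using this: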

- With a password protecting the private key

- With no password required

Please note: When you establish certificate based authentication with SSH, you will generate a public/private key pair on your local computer. The public key will only be copied up to the server which you wish to access. The private key always stays on your local computer.

During the process of generating the private and public key pair, you will be asked if you want to password protect the private key. Some things to consider:

- Will this ID be used for automated functional access ?

If you are creating the certificate based authentication so that a service can access data or run commands on the remote machine, then you will not want to password protect the local file. (If you do, you will end up including the password in the scripts anyway, so what would be the point?)

Personally, I have backup scripts which either pull data or snapshots on a regular basis. Google “rsync via ssh” for tips on this, or “remote commands with ssh” for tips and ideas. (Also, I may cover my obsessive compulsive backups in a later post.)

- This ID will be used for a rescue account

In this case the certificate is usually created to avoid password expiration requirements. If it is a rescue account, it often logs into root. Any time you use certificate access for root, the private key should be password protected. Rescue accounts are often stored on centralized “jump boxes” and are expected to only be used during a declared emergency of some kind (such as a full system lockout due to a password mis-synchronization).

These private keys should always be password protected.

If someone has access to backups or disk images of the jump box, or otherwise gets access to your .ssh directory, and you have not password protected the private key, then they own the account (e.g., they can use the public/private key pair from any box).

- Convenient remote logons…

The most common use of certificate based authentication for SSH is in fact to log you into the remote box without having to type passwords. (I do this, too…) But there are a few things to think about (these are all good general recommendations, but I consider them requirements when using an automated login…)

- Automatic login should never be used on a high-privilege account (e.g., root)

- If those accounts have sudo privileges, sudo should require a password

- A new certificate (public and private key pair) should be created for each machine you want to access the remote server from (e.g., desktop, laptop, etc.). Do not reuse the same files.

- The certificate should be replaced occasionally (perhaps every 6 months).

- Use a large key and use the RSA algorithm option (e.g., ssh-keygen -b 3608 -t rsa)
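The "replace it occasionally" rule is easy to forget, so a periodic check can help. The following is a minimal sketch under a few assumptions: private keys follow the default id_* naming, the file's modification time is a fair stand-in for when the key was generated, and 180 days approximates six months. The function name is hypothetical.

```shell
# Hypothetical helper: list private keys not modified in more than N days.
# Usage: find_stale_keys ~/.ssh        (defaults to 180 days)
#        find_stale_keys ~/.ssh 90
find_stale_keys() {
    key_dir="$1"
    max_age_days="${2:-180}"
    # Match id_* files but skip the public halves (*.pub).
    find "$key_dir" -type f -name 'id_*' ! -name '*.pub' -mtime +"$max_age_days"
}
```

Any path it prints is a candidate for regeneration with ssh-keygen, followed by replacing the matching public key on the servers.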

SSH Certificate Based Authentication Instructions

So, without further ado… Let’s set up a Certificate for authentication.

Part 1 – From the client (e.g. your workstation, etc…)

First, confirm that you can generate a key.

$ ssh-keygen --help

The options that are going to be of interest are:

- -b bits Number of bits in the key to create

- -t type Specify type of key to create

DSA type keys, you will note, have a key length of exactly 1024. As a result, I choose RSA with a long key. My recommendation is that you take 2048 as a minimum length. I am pretty paranoid, and I have a strong background in cryptography, but I have never used a key longer than 4096.

The longer the key, the more math the computer must perform while establishing the session. After the session is established, then one of the block-ciphers discussed above performs all of the crypto. If you are making a key for a slow device (like a PDA) or a microcontroller based device, then use a shorter key length. Regardless, actually changing the keys regularly is a more secure practice than making a large one that is never changed.

$ ssh-keygen -b 3608 -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/Users/erikheidt/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /Users/erikheidt/.ssh/id_rsa.

Your public key has been saved in /Users/erikheidt/.ssh/id_rsa.pub.

The key fingerprint is:

43:69:d8:8e:c4:af:f8:8b:5a:2d:db:75:91:fd:06:be erikheidt@Trinity.local

The key's randomart image is:

+--[ RSA 3608]----+

| |

| . o . |

| + = |

| . * o |

| . S o o |

| o . . o o |

| + o . . . o |

| . * . . o |

| ..o +. E |

+-----------------+

Now, make sure your .ssh directory is secured properly…

$ chmod 700 ~/.ssh

Next, you need to copy the public key (only) to the server or remote host you wish to login to.

$ cd ~/.ssh

$ scp id_rsa.pub YourUser@Hostname:

Now we have copied the file up to the server….

Part 2 – On the Server or remote host….

Logon to the target system (probably using a password) and then set things up on that end…

$ ssh YourUser@Hostname

$ mkdir -p ~/.ssh

$ chmod 700 ~/.ssh

$ cat id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 600 ~/.ssh/authorized_keys

Done ! Your next login should use certificate based authentication !
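If you repeat the server-side steps on several hosts, they can be collected into one function. This is a sketch only: the function name and its two arguments (the uploaded public key file and the account's home directory) are assumptions made for the example, and the permissions are the commonly recommended ones for OpenSSH.

```shell
# Hypothetical helper: append a public key to an account's authorized_keys.
# Usage: install_public_key ~/id_rsa.pub "$HOME"
install_public_key() {
    pubkey_file="$1"
    target_home="$2"
    # Create the directory if needed and keep it private to the owner.
    mkdir -p "$target_home/.ssh"
    chmod 700 "$target_home/.ssh"
    # Append rather than overwrite, so existing keys keep working.
    cat "$pubkey_file" >> "$target_home/.ssh/authorized_keys"
    chmod 600 "$target_home/.ssh/authorized_keys"
}
```

Many OpenSSH installations also ship ssh-copy-id, which performs similar steps from the client side in a single command.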

I hope this posting on SSH was useful.

Cheers, Erik
